Introduction

These are personal notes from my attempt to understand what the General Theory of Information tells us about how we make sense of our behaviors and how we relate to each other, to ourselves (self-reflection), to the material universe we encounter, and to the intelligent machinery we build. It is a work in progress that goes through a process of discovery, reflection, application, and sharing. As I write these notes to share, I am discovering new areas that I need to explore, and I will repeat the learning process.

The Thesis, Antithesis, and the Synthesis

Jazz allows the interplay of structure and freedom through three dialectical stages of development: a thesis, which gives rise to its reaction; an antithesis, which contradicts or negates the thesis; and a synthesis, which resolves the tension between the two. We can say that our understanding of artificial intelligence (AI), which we implement using digital computers, and of natural intelligence, which living beings exhibit, is going through the same stages of development.

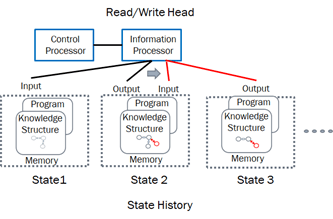

The thesis began with the building of a digital computer. Alan Turing, in addition to giving us the Turing Machine from his observation of how humans used numbers and operations on them, also discussed unorganized A-type machines. His 1948 paper “Intelligent Machinery” gives an early description of the artificial neural networks used to simulate neurons today. His paper was not published until 1968 – years after his death – in part because his supervisor at the National Physical Laboratory, Charles Galton Darwin, described it as a “schoolboy essay.”

In 1943, McCulloch and Pitts[1] proposed mimicking the functionality of a biological neuron. Turing’s 1948 paper goes further and discusses the teaching of machines, as its summary says: “The possible ways in which machinery might be made to show intelligent behavior are discussed. The analogy with the human brain is used as a guiding principle. It is pointed out that the potentialities of the human intelligence can only be realized if suitable education is provided. The investigation mainly centres round an analogous teaching process applied to machines. The idea of an unorganized machine is defined, and it is suggested that the infant human cortex is of this nature. Simple examples of such machines are given, and their education by means of rewards and punishments is discussed. In one case, the education process is carried through until the organization is similar to that of an ACE.”

Here ACE refers to the Automatic Computing Engine, an early British electronic serial stored-program computer designed by Turing. Current-generation digital computers are stored-program computers. They use sequences of symbols to represent data containing information about a system, and programs that operate on this information to create knowledge of how the state of the system changes when events represented in the program change the behavior of the components or entities interacting with each other in the system. The machines are thus taught, in digital notation using sequences of symbols, what humans know about the behavior of the systems they observe.

It is interesting to see his vision of the thinking machine. “One way of setting about our task of building a ‘thinking machine’ would be to take a man as a whole and try to replace all the parts of him by machinery. He would include television cameras, microphones, loudspeakers, wheels and ‘handling servomechanisms’ as well as some sort of ‘electronic brain’. This would of course be a tremendous undertaking. The object if produced by present techniques would be of immense size, even if the ‘brain’ part were stationary and controlled the body from a distance. In order that the machine would have a chance of finding things out for itself it should be allowed to roam the countryside, and the danger to the ordinary citizen would be serious. However even when the facilities mentioned above were provided, the creature would still have no contact with food, sex, sport and many other things of interest to the human being. Thus although this method is probably the ‘sure’ way of producing a thinking machine it seems to be altogether too slow and impracticable.”

Perhaps this paragraph prompted his superior’s remark about a schoolboy’s essay and the withholding of publication until 1968. I wonder what the impact would have been if this paper had been published and available to the 1956 Dartmouth Summer Project organized by Shannon, Minsky, Rochester, and McCarthy.

It is remarkable that Turing predicted what areas are suitable and had an opinion about what would be most impressive.

“What can be done with a ‘brain’ which is more or less without a body providing, at most, organs of sight, speech, and hearing. We are then faced with the problem of finding suitable branches of thought for the machine to exercise its powers in. The following fields appear to me to have advantages:

(i) Various games, e.g., chess, noughts and crosses, bridge, poker

(ii) The learning of languages

(iii) Translation of languages

(iv) Cryptography

(v) Mathematics

… Of the above possible fields, the learning of languages would be the most impressive, since it is the most human of these activities.”

The thesis is that teaching machines how to perform tasks using programs and data (represented as sequences of symbols), and using neural network models to convert information received from various sources into knowledge, has delivered a class of intelligent machinery that has proved very valuable in improving processes that enable communication, collaboration, and commerce at scale and with efficiency. We have made tremendous progress in all five areas mentioned by Turing.

In addition, we have made progress in building machines that roam the countryside in the form of autonomous vehicles, robots, and drones, without posing danger to ordinary citizens unless the users of this intelligent machinery intend it.

The antithesis is that this progress also brings unwanted side effects, in the form of AI being used against ordinary citizens’ freedom, privacy, safety, and survival. Some of these issues stem from human greed, the propensity of a few to control the lives of many, and the age-old conflict between good and evil. Even so, technological progress in both general-purpose computers and AI machines has accelerated the pace of both good and evil and has widened the gap between the haves and the have-nots. The unbridled power wielded by the state in collusion with big tech is wreaking havoc on free speech and threatening democracies through the selective abuse of technology. Fake news, fraud, and invasions of individual privacy and security are accelerated and committed at a scale that was not possible before the Internet and AI.

The shortcomings of current-generation, half-brained AI and the limits of cognitive structures based on symbolic computing are well documented.

A New Class of Autopoietic and Cognitive Machines, Information (mdpi.com)

Data, Structure, and Information in Artificial Intelligence, BDCC Special Issue (mdpi.com)

https://tfpis.com/wp-content/uploads/2022/07/iiai-aai-paper-general-theory-of-information.pdf

In addition, as John von Neumann[2] pointed out in 1948, “It is very likely that on the basis of the philosophy that every error has to be caught, explained, and corrected, a system of the complexity of the living organism would not last for a millisecond. Such a system is so integrated that it can operate across errors. An error in it does not in general indicate a degenerate tendency. The system is sufficiently flexible and well organized that as soon as an error shows up in any part of it, the system automatically senses whether this error matters or not. If it doesn’t matter, the system continues to operate without paying any attention to it. If the error seems to the system to be important, the system blocks that region out, by-passes it, and proceeds along other channels. The system then analyzes the region separately at leisure and corrects what goes on there, and if correction is impossible the system just blocks the region off and by-passes it forever. The duration of operability of the automaton is determined by the time it takes until so many incurable errors have occurred, so many alterations and permanent by-passes have been made, that finally the operability is really impaired. This is a completely different philosophy from the philosophy which proclaims that the end of the world is at hand as soon as the first error has occurred.”

This “self-regulation” behavior exhibited by biological systems is made possible by cell replication and by metabolism using energy and matter transformations. The knowledge to replicate cells, use them to build cognitive apparatuses, sense and process information using several mechanisms, and use the resulting knowledge to execute life processes is encoded in the genome.

According to Wikipedia, “The human genome is a complete set of nucleic acid sequences for humans, encoded as DNA within the 23 chromosome pairs in cell nuclei and in a small DNA molecule found within individual mitochondria. These are usually treated separately as the nuclear genome and the mitochondrial genome. Human genomes include both protein-coding DNA sequences and various types of DNA that does not encode proteins. The latter is a diverse category that includes DNA coding for non-translated RNA, such as that for ribosomal RNA, transfer RNA, ribozymes, small nuclear RNAs, and several types of regulatory RNAs. It also includes promoters and their associated gene-regulatory elements, DNA playing structural and replicatory roles, such as scaffolding regions, telomeres, centromeres, and origins of replication, plus large numbers of transposable elements, inserted viral DNA, non-functional pseudogenes and simple, highly-repetitive sequences. Introns make up a large percentage of non-coding DNA. Some of this non-coding DNA is non-functional junk DNA, such as pseudogenes, but there is no firm consensus on the total amount of junk DNA.”

“Human body is a building made from trillions of building blocks called cells. Cells exchange nutrients and chemical signals. Each cell is akin to a tiny factory, with different types of cells performing specialized functions, all of which contribute to the working of the entire body.”

For a more detailed discussion of the society of genes and how they organize themselves to build the autopoietic and cognitive system, see the book “The Society of Genes”.

[Yanai, Itai; Lercher, Martin. The Society of Genes (p. 11). Harvard University Press. Kindle Edition.]

The cognitive processes, both those wired in the genome and those learned using the cognitive apparatuses of the biological system, provide a unique sense of self and are pivotal for the intelligent behavior that allows the system to manage itself and its interactions with the external universe.

As Damasio [3] points out “Humans have distinguished themselves from all other beings by creating a spectacular collection of objects, practices, and ideas, collectively known as cultures. The collection includes the arts, philosophical inquiry, moral systems and religious beliefs, justice, governance, economic institutions, and technology and science. Why and how did this process begin? A frequent answer to this question invokes an important faculty of the human mind—verbal language—along with distinctive features such as intense sociality and superior intellect. For those who are biologically inclined the answer also includes natural selection operating at the level of genes. I have no doubt that intellect, sociality, and language have played key roles in the process, and it goes without saying that the organisms capable of cultural invention, along with the specific faculties used in the invention, are present in humans by the grace of natural selection and genetic transmission. The idea is that something else was required to jump-start the saga of human cultures. That something else was a motive. I am referring specifically to feelings, from pain and suffering to well-being and pleasure. …”

This leads us to two questions. What are the limitations of intelligent humans and today’s intelligent machines? How can we compensate for the frailties of humanity and improve the intelligence of machines to assist human intelligence in building a better societal consciousness and culture? A synthesis can only occur if we develop a deeper understanding of how human intelligence has evolved through natural selection, how it is passed on from survivors to successors, and how biological systems process information received through their cognitive apparatuses and use the knowledge to execute behaviors that are considered intelligent. These behaviors help secure their stability, safety, and survival while the systems pursue the goals defined in their wired and learned life processes.

Back to the Basics

Philosophers from the East and the West have been pondering the true nature of the Material and Mental Worlds and their relationships. Between the 8th and 6th centuries B.C.E., Samkhya philosophy advocated two realities: Prakriti, matter, and Purusha, self. In China, Confucius (551 – 479 B.C.E.) focused on knowledge of two types: one innate, the other acquired through learning. In ancient Greece, Heraclitus (c. 500 B.C.E.) acknowledged the existence of the material world but emphasized that it is constantly changing.

Plato (427-347 B.C.E.) admitted the existence of an external world but came to the conclusion that the world perceived by the senses is changing and unreliable. He maintained that the true world is the world of ideas, which are not corruptible. This world of ideas is not accessible to the senses, but only to the mind. He proposed Ideas/Forms as a more general system of abstractions. Aristotle, Plato’s student, on the other hand, not only affirmed the existence of the real world but also maintained that our ideas of the world are obtained by abstracting common properties of the material objects the senses perceive. Among more recent philosophers, Rene Descartes (1596-1650) believed that the external world was real and that objective reality is indirectly derived using the senses. He classified his observations of material objects into two classes, primary and secondary. Motion is classified as primary, and color as secondary. Hobbes (1650) proposed that ideas are images or memories received through the senses. He did not believe in ideas and postulated that we reason using symbols and names for experiences.

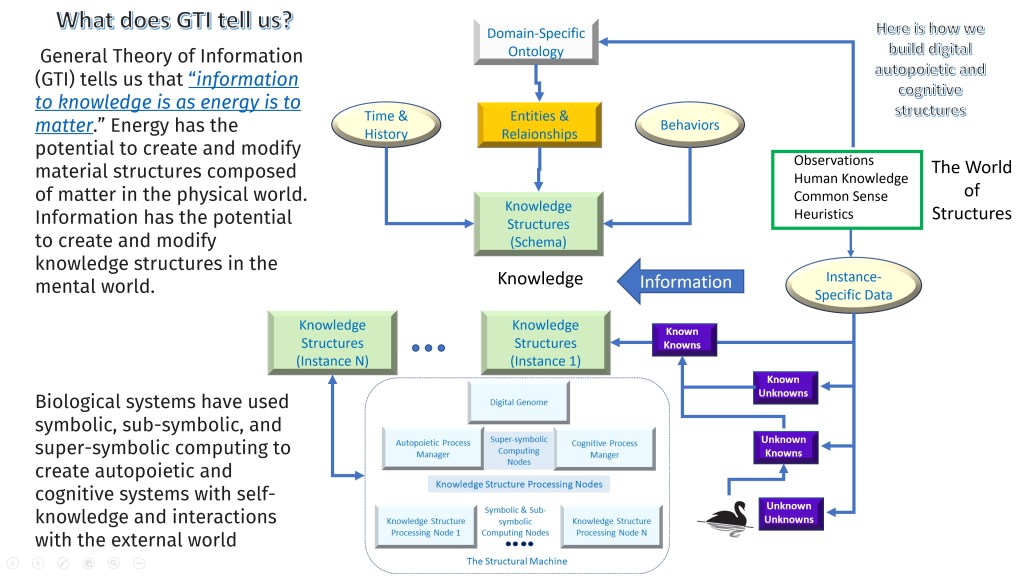

These concepts are well reviewed by Prof. Burgin in his book “Theory of Knowledge”. His articulation of the general theory of information (GTI) and the theory of structural reality gives a scientific interpretation to many of the observations of these philosophers, and provides a theory that integrates our understanding of the material and mental worlds using the world of ideal structures as the underlying foundation. According to GTI, the existential triad consists of:

- The material world deals with energy, matter, and their interactions resulting in the formation of material structures in space and their evolution in time. The material world is governed by the laws of nature (which we observe and model using the mental structures of physics and mathematics).

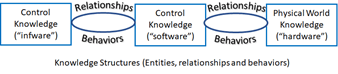

- The mental world perceives the state of material structures in space and their evolution in time in the form of information, and stores it as knowledge in the form of mental structures. The mental structures use the ideal structures in the form of fundamental triads (namespaces) that assign labels to the entities, and use the knowledge about their relationships and evolutionary behaviors.

- The world of ideal structures provides a representation of material and mental structures in the form of labels of observed physical or mental entities, their attributes, relationships, and their behavioral evolution in time. The fundamental triad, therefore, allows the representation of material and mental structures in space and time.

In short, material structures are formed and evolve based on energy and matter transformations. The ideal structures provide a mechanism to represent the material structures. An observer can use the observations received as information to create mental structures, not only to represent the observations but also to reason with other structures and to interact with the material world through physical structures.

Structures in the form of the fundamental triads provide the means to create knowledge from information and use it to reason and interact with material structures.
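To make the idea of a composable triad concrete, here is a minimal Python sketch. It is my own illustration and not GTI's formal definition: the names `Triad` and `KnowledgeStructure`, and the choice to model a triad as a labeled entity, a relationship, and a second labeled entity, are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass(frozen=True)
class Triad:
    """Hypothetical triad: a labeled entity, a relationship, a labeled entity."""
    source: str
    relation: str
    target: str


@dataclass
class KnowledgeStructure:
    """A composable collection of triads describing part of a system's state."""
    triads: Set[Triad] = field(default_factory=set)

    def add(self, source: str, relation: str, target: str) -> None:
        self.triads.add(Triad(source, relation, target))

    def compose(self, other: "KnowledgeStructure") -> "KnowledgeStructure":
        """Compose two structures by taking the union of their triads."""
        return KnowledgeStructure(self.triads | other.triads)

    def related(self, source: str, relation: str) -> Set[str]:
        """Return every target linked to `source` by `relation`."""
        return {t.target for t in self.triads
                if t.source == source and t.relation == relation}


# Information received from an observation becomes one structure ...
observed = KnowledgeStructure()
observed.add("magnet", "attracts", "iron filings")

# ... and previously held knowledge is another.
learned = KnowledgeStructure()
learned.add("magnet", "has", "north pole")

# Composition yields a larger structure that can be queried.
combined = observed.compose(learned)
attracted = combined.related("magnet", "attracts")
```

The point of the sketch is only that small labeled structures can be merged into larger ones and then queried, which is the sense in which triads are composable here.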

In essence, information is to knowledge as energy is to matter. Energy has the potential to create or modify material structures. Material structures carry information that observers can receive using various senses and create knowledge in the form of mental structures. The mental structures allow the observer to model and reason about the observations using ideal structures (which include various entities, their relationships and their dynamic behaviors) and interact with the material world. The observations include the state of the material structures and their dynamics as they change based on the interactions within and with the external world.

Information carried by the material structures is the bridge between the material and the mental worlds. Information, therefore, takes the material or mental forms and provides descriptions of the structures in the form of their state and evolutionary behaviors in time.

The mental structures provide the means to create representations of observations and to carry out abstraction, reasoning, and inference such as deduction, induction, and abduction. Mathematics is created as mental structures composed of fundamental triads consisting of symbols and names or labels.

How is this related to understanding intelligence both natural and artificial? GTI provides tools to model the autopoietic and cognitive behaviors of biological systems and also to infuse autopoietic and cognitive behaviors into digital automata. In addition, it provides a deep understanding of information and knowledge and how they are related to energy and matter. Understanding the difference between ontological information contained in the material universe and the epistemological information perceived by the biological systems as observers (which depends on the cognitive apparatuses that the observer brings to receive and process information) is necessary to understand how the observers interact with the universe and with each other. Language is one carrier of information that is invented to facilitate communication between the observers acting as senders and receivers.

What is Language?

While volumes have been written about language in many languages and many experts are using AI to automate language processing to extract knowledge using machines to gain insights with the hope to match human ability, it is equally important to reexamine what the role of language is, given the new understanding of information and information carriers from GTI.

According to GTI, language, whether spoken or written, is a well-structured mental carrier of information that describes the state and dynamics of various material or mental structures in the form of fundamental triads. A fundamental triad, as we have seen in the above discussion, is an ideal structure describing its state and evolution in terms of various entities, their relationships, and the behaviors that evolve the state when various events cause fluctuations. Fundamental triads are composable structures describing the information of a system’s state and its evolution.

Language is purely a mental structure conceived by biological systems using their neural networks, which receive and process information into knowledge, also in the form of structures. Information is materialized by the physical structures of biological systems in various forms as carriers of information and communicated as sequences of sounds or sequences of symbols. Each individual’s mental structures are trained (over a lifetime, using various means) to create, communicate, and process information into executable knowledge in the form of mental structures.

If we accept this thesis, it opens up a new way to process language using machines. Language represents materialized information composed of fundamental triads representing a specific domain or system, which contains various labeled entities, their relationships, and the evolution of the system where various interactions change the structures. The ontology of the domain provides a model of the labeled entities and the relationships. GTI provides a schema and defines operations on them to model the knowledge structures. Combining the ontologies and the operations, we can create a schema that represents the knowledge structures the language carries as structures of the domain. Natural language processing algorithms can then read the specific text to populate the schema with various instances.
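The last step, reading text to populate a schema with instances, can be sketched in a few lines of Python. This is a deliberately naive illustration under stated assumptions: the toy ontology, the function name `populate_schema`, and the rule that only three-word "entity relation entity" sentences count are all hypothetical, and a real system would use a proper natural language processing pipeline instead.

```python
import re
from collections import defaultdict

# Hypothetical toy ontology: the labeled entities of a domain and the
# relationships the schema allows between them.
ONTOLOGY = {
    "entities": {"Earth", "Moon", "Sun"},
    "relations": {"orbits", "illuminates"},
}

ARTICLES = {"the", "a", "an"}


def populate_schema(text, ontology):
    """Collect (source, target) instances per relation found in `text`.

    A sentence contributes an instance only if, after dropping articles,
    it has exactly the form "<entity> <relation> <entity>" and all three
    labels are defined in the ontology.
    """
    instances = defaultdict(list)
    for sentence in re.split(r"[.!?]", text):
        words = [w for w in sentence.split() if w.lower() not in ARTICLES]
        if len(words) != 3:
            continue
        source, relation, target = words
        if (source in ontology["entities"]
                and relation in ontology["relations"]
                and target in ontology["entities"]):
            instances[relation].append((source, target))
    return dict(instances)


text = ("The Moon orbits the Earth. The Earth orbits the Sun. "
        "The Sun illuminates the Moon. The Earth likes jazz.")
instances = populate_schema(text, ONTOLOGY)
```

The sentence about jazz is ignored because "likes" and "jazz" are not labels in the ontology, which shows how the ontology constrains what the text is allowed to contribute to the schema.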

What are Sentience, Consciousness, Self-Awareness, and Sapience?

According to GTI, sentience, consciousness, self-awareness, and sapience (wisdom or insight) are the outcomes of cognitive behaviors exhibited by the biological structures in the form of a body made up of material structures and a brain or a nervous system made up of neurons. They are the result of the states of a “self”, interactions with the external world, and the history of its state evolution. The “self” appears at various levels of organization of the system composed of autonomous process execution nodes communicating with each other. These outcomes are unique to each individual living being. They can express themselves using information carriers like language or gestures etc. Information is the description of a mental or physical structure described in terms of the fundamental triads. Information is materialized or mentalized by the physical structures and communicated using the information carriers.

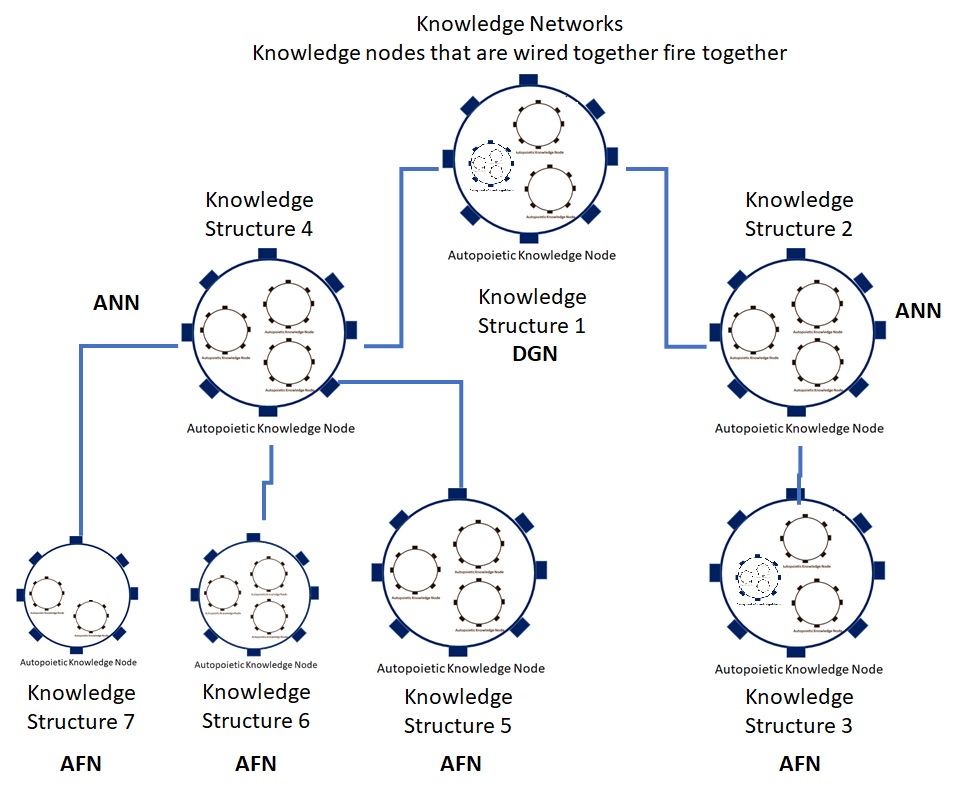

Using the tools of GTI, the cognitive behaviors can be modeled as a multi-layer knowledge network where the functional nodes are grouped to execute the cognitive behaviors in the form of local functional nodes, clusters of functional nodes, and a global knowledge network. Information received through the senses is processed by neural networks and nodes that are fired together wire together to capture the state and evolution of the structures the information describes. The functional nodes that are wired together fire together to exhibit cognitive behaviors.

The knowledge structures, acting as functional nodes, clusters of knowledge structures, and global knowledge networks, store the various states of the mind, brain, and body system and their evolution using the life processes specified in the genome.
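The phrase "nodes that fire together wire together" can be illustrated with a small Hebbian-style sketch in Python. This is a toy under my own assumptions, not a model from GTI or from neuroscience: the functions `hebbian_wire` and `recall`, the unit learning rate, and the example events are hypothetical.

```python
from collections import Counter
from itertools import combinations


def hebbian_wire(events, rate=1.0):
    """Strengthen the link between every pair of nodes active in the same event."""
    weights = Counter()
    for active in events:
        # Sorting gives each unordered pair one canonical key.
        for a, b in combinations(sorted(active), 2):
            weights[(a, b)] += rate
    return weights


def recall(weights, cue, threshold):
    """Return the nodes wired to `cue` with strength at or above `threshold`."""
    fired = set()
    for (a, b), strength in weights.items():
        if strength < threshold:
            continue
        if a == cue:
            fired.add(b)
        elif b == cue:
            fired.add(a)
    return fired


# Each event is the set of nodes that fired together in one observation.
events = [
    {"smoke", "fire"},
    {"smoke", "fire", "heat"},
    {"rain", "cloud"},
    {"smoke", "fire"},
]
weights = hebbian_wire(events)

# Nodes that were wired together now fire together when one is cued.
associated = recall(weights, "smoke", threshold=2)
```

Here "smoke" recalls "fire" because the two co-occurred three times, while the single co-occurrence with "heat" stays below the threshold.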

According to Sean Carroll, there are two factors that define the form and function of the new cellular organism that contains the genetic description. “The development of form depends on the turning on and off of genes at different times and places in the course of development. Differences in form arise from evolutionary changes in where and when genes are used, especially those genes that affect the number, shape, or size of structure.” In addition, a class of genetic switches regulates how the genes are used and plays a great role in defining the function of the living organism.

[S. B. Carroll, “Endless Forms Most Beautiful: The New Science of Evo Devo,” W. W. Norton & Co., New York, 2005.]

The evolution of the state is non-Markovian and depends not only on the events that are influencing the evolution at any particular instant but also on the history that is relevant to the cognitive behavior. This is in contrast to current symbolic computation using digital computers which is Markovian. A Markov process assumes that the next state of a process only depends on the present state and not the past states.
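The distinction can be shown with a small Python sketch. It is an illustrative toy under my own assumptions, not the GTI formalism: the update rules, the function names `markov_step` and `history_step`, and the three-event memory window are all hypothetical.

```python
def markov_step(state, event):
    """Markovian update: the next state depends only on the present state and event."""
    return state + event


def history_step(state, event, history, memory=3):
    """History-dependent update: the last `memory` events also shape the next state."""
    recent = history[-memory:]
    bias = sum(recent) / len(recent) if recent else 0.0
    return state + event + bias


# Same present state and same event in both cases.
state, event = 0, 1

markov_next = markov_step(state, event)

# With no relevant history the two rules agree ...
fresh_next = history_step(state, event, history=[])

# ... but a history of earlier events changes the outcome.
seasoned_next = history_step(state, event, history=[1, 1])
```

In the Markovian rule, two systems in the same state receiving the same event always end up in the same next state. In the history-dependent rule they can diverge, which is the property the text attributes to cognitive behavior.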

The knowledge network involved in cognitive behaviors is unique to each individual and state history is managed by the life processes derived from the genome.

How are Sentient Structures Different from Material Structures?

Material structures are formed through energy and matter interactions obeying the laws of physics. These structures, composed of constituent components, are subject to fluctuations caused by external forces, and their evolution is determined by the laws of physics and thermodynamics. For example, the structure of a collection of iron filings changes when an external magnetic force is applied. When the fluctuations cause large changes in the components within the structure, it goes through phase transitions that minimize the entropy of the structure.

The behavior of material structures interacting with external forces obeys the laws of physics dealing with the transformation of matter and energy. However, when various structures come together, their components undergo changes based on the interactions of these structures, also under the influence of the laws of physics. These interactions cause fluctuations in individual state evolution, and if the interactions are strong and the fluctuations are large, the individual structures experience a non-equilibrium condition where the entropy and the energy of the whole system change. The system (called a complex adaptive system, CAS) consists of autonomous individual structures undergoing structural changes and adapting to their environment, which consists of other structures. For example, when the element phosphorus comes close to oxidants, halogens, some metals, nitrites, sulfur, and many other compounds, it reacts violently, causing an explosion hazard. In other systems, when fluctuations drive the system to far-from-equilibrium states, emergence occurs that could change their state. The outcome is not self-managed but is an emergent outcome based on state transitions from one energy minimum to another.

A CAS exhibits self-organization, emergence, non-linearity, and transitions between states of order and chaos. The system often exhibits behavior that is difficult to explain through an analysis of the system’s constituent parts. Such behavior is called emergent. CAS are complex systems that can adapt to their environment through an evolution-like process and are isomorphic to networks (nodes executing specific functions based on local knowledge and communicating information using links connecting the nodes). The system evolves into a complex multi-layer network, and the functions of the nodes and the composed structure define the global behavior of the system as a whole. Sentience, resilience, and intelligence are the result of these structural transformations and dynamics exhibiting autopoietic and cognitive behaviors. Autopoiesis here refers to the behavior of a system that replicates itself and maintains identity and stability while facing fluctuations caused by external influences. Cognitive behaviors model the system’s state, sense internal and external changes, analyze, predict, and take action to mitigate any risk to its functional fulfillment.

However, when the structure experiences large fluctuations in the interactions, the non-equilibrium dynamics drive the state into a new structure based on the emergent behavior, which is non-deterministic. The outcome may or may not be the best for the structure’s stability and sustenance. When a large number of structures start interacting together, the laws of thermodynamics influence the microscopic and macroscopic behaviors of these structures. According to the first law of thermodynamics, if the energy of the system consisting of structures that are interacting with each other is conserved, it would reach equilibrium and the structures would tend to be stable. The second law of thermodynamics states that if an isolated system is left to itself, it tends to increase its entropy, which is a measure of disorder. However, if the system can exchange energy with the environment outside, it can increase its order by decreasing entropy inside and transferring it to the outside. This allows the structures to use energy from outside and form more complex structures with lower entropy or higher order.

It seems that living organisms have evolved from the soup of physical and chemical structures in three different phases, where the characteristics of the evolving systems are different. In all phases, evolution involves complex multi-layer networks. According to Westerhoff et al., “Biological networks constitute a type of information and communication technology (ICT): they receive information from the outside and inside of cells, integrate and interpret this information, and then activate a response. Biological networks enable molecules within cells, and even cells themselves, to communicate with each other and their environment.” (https://www.frontiersin.org/articles/10.3389/fmicb.2014.00379/full)
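The entropy bookkeeping in the thermodynamic argument above can be written compactly using the standard entropy balance for a system that exchanges energy or matter with its surroundings. The symbols below are my notation, not the original text's: $d_i S$ is the entropy produced inside the system and $d_e S$ is the entropy exchanged with the environment.

```latex
\begin{aligned}
  dS &= d_i S + d_e S, \qquad d_i S \ge 0 \\[4pt]
  \text{isolated system:}\quad & d_e S = 0 \;\Rightarrow\; dS = d_i S \ge 0 \\[4pt]
  \text{open system:}\quad & dS < 0 \quad\text{is possible when}\quad d_e S < -\,d_i S
\end{aligned}
```

The first line says that internal processes can only produce entropy. The second line recovers the second law for a system left to itself. The third line is the case described for living structures: order inside can grow only if more entropy is exported to the environment than is produced internally.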

Conclusion

It is illuminating to learn about the worlds I, as an individual, live in. The material world and the mental worlds I live in deal with matter, energy, knowledge, and information. The digital world is an extension of the material world, to which meaning is given by the mental world. The mental world assigns meaning to what physical structures such as computers, networks, and storage produce. The information contained in the digital world enhances the mental world, creating the virtual world. With this picture, we will start to understand the various entities we interact with, their relationships, and their evolution. Hopefully, this knowledge helps us to understand the contemporary human societies we live in and allows us to improve our behaviors to enjoy the finite time we have in the material world. It is interesting to realize that each one of us is a unique entity born at t=0, but our footprints continue to exist till eternity in a multitude of information carriers, even as we cease to be a living system when we succumb to the inevitable death.

Food for thought.

Acknowledgement

Many people I have interacted with have contributed to my interpretation of the material, mental, and digital worlds I live in. Of particular note, special persons who deepened my knowledge are shown here.

[1] “Because of the “all-or-none” character of nervous activity, neural events and the relations among them can be treated by means of propositional logic.” The 1943 paper of McCulloch and Pitts lays the foundation for deep learning where neurons fired together are wired together to represent knowledge from the epistemic information received from observations in biological systems.

[2] W. Aspray and A. Burks, “Papers of John von Neumann on Computing and Computer Theory,” MIT Press, Cambridge, 1989.

[3] Damasio, Antonio R. The Strange Order of Things (pp. 3-4). Knopf Doubleday Publishing Group. Kindle Edition.